Bayes’ Theorem is a fundamental theorem in probability theory that describes the relationship between conditional probabilities. It is named after the English mathematician Thomas Bayes, who first introduced the concept in the 18th century.

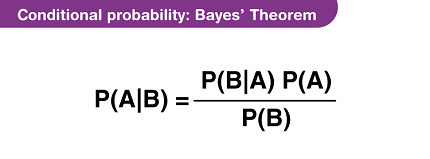

Bayes’ Theorem states that the probability of an event A, given that event B has occurred, is equal to the probability of event B given that event A has occurred, multiplied by the prior probability of event A, divided by the prior probability of event B. Mathematically, this can be expressed as:

P(A|B) = P(B|A) * P(A) / P(B)

where P(A) is the prior probability of event A, P(B) is the marginal probability of event B (which must be greater than zero), P(B|A) is the conditional probability of event B given that event A has occurred, and P(A|B) is the conditional probability of event A given that event B has occurred.
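As a minimal sketch, the formula can be written as a small Python function. The function name `bayes_posterior` and the numbers in the example are hypothetical and chosen only for illustration.

```python
def bayes_posterior(prior: float, likelihood: float, marginal: float) -> float:
    """Return P(A|B) = P(B|A) * P(A) / P(B)."""
    if not 0.0 <= prior <= 1.0 or not 0.0 <= likelihood <= 1.0:
        raise ValueError("prior and likelihood must be probabilities in [0, 1]")
    if not 0.0 < marginal <= 1.0:
        raise ValueError("marginal P(B) must be in (0, 1]")
    return likelihood * prior / marginal


# Hypothetical inputs: P(A) = 0.3, P(B|A) = 0.5, P(B) = 0.25
example_posterior = bayes_posterior(prior=0.3, likelihood=0.5, marginal=0.25)
print(round(example_posterior, 3))
```

The guard on `marginal` reflects the fact that the formula is undefined when P(B) is zero.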

Bayes’ Theorem is widely used in statistics, particularly in Bayesian inference, which is a statistical method for estimating parameters of a model based on observed data. It is also used in machine learning, artificial intelligence, and many other fields.

What Is Required to Apply Bayes’ Theorem

Bayes’ Theorem is a powerful tool in probability and statistics that allows us to update our beliefs about the likelihood of an event occurring based on new information. To apply Bayes’ Theorem, we need to have the following information:

- Prior probability: This is the probability of the event occurring before any new information is taken into account. It is often based on historical data or prior knowledge.

- Likelihood: This is the probability of the new information given that the event has occurred. It describes how likely the new evidence is to be observed if the event has actually occurred.

- Marginal probability: This is the probability of observing the new evidence, regardless of whether the event has occurred or not. It is often calculated as the sum or integral of the joint probability over all possible values of the event.

With these pieces of information, we can use Bayes’ Theorem to calculate the posterior probability, which is the updated probability of the event occurring given the new information. This allows us to make more informed decisions and predictions based on the available evidence.
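The three ingredients can be combined in a few lines of Python. This is a sketch under the assumption that the competing hypotheses are mutually exclusive and exhaustive, so the marginal probability is a simple sum. The machine names, production shares, and defect rates are hypothetical.

```python
def marginal_probability(priors: dict, likelihoods: dict) -> float:
    """P(E) = sum over hypotheses H of P(E|H) * P(H)."""
    if abs(sum(priors.values()) - 1.0) > 1e-9:
        raise ValueError("priors must sum to 1")
    return sum(likelihoods[h] * p for h, p in priors.items())


def posteriors(priors: dict, likelihoods: dict) -> dict:
    """Return P(H|E) for every hypothesis H."""
    evidence = marginal_probability(priors, likelihoods)
    return {h: likelihoods[h] * p / evidence for h, p in priors.items()}


# Hypothetical data: share of output per machine (prior) and
# defect rate per machine (likelihood of observing a defect).
machine_share = {"m1": 0.5, "m2": 0.3, "m3": 0.2}
defect_rate = {"m1": 0.01, "m2": 0.02, "m3": 0.03}

p_defect = marginal_probability(machine_share, defect_rate)
machine_given_defect = posteriors(machine_share, defect_rate)
print(p_defect, machine_given_defect)
```

Here the posterior answers the question "given a defective item, which machine most likely produced it?".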

Who Uses Bayes’ Theorem

Bayes’ Theorem is a fundamental concept in probability theory and statistics that is useful for anyone who wants to make decisions or predictions based on uncertain information. It is used in a wide range of fields, including:

- Machine learning and artificial intelligence: Bayes’ Theorem is used in Bayesian inference, a statistical technique for estimating the parameters of a model based on observed data.

- Epidemiology: Bayes’ Theorem is used in disease surveillance to estimate the probability of a disease outbreak based on observed cases.

- Finance: Bayes’ Theorem is used in risk assessment and portfolio optimization to estimate the probability of different investment outcomes.

- Law: Bayes’ Theorem is used to weigh forensic evidence, such as DNA matches and fingerprint analysis, when assessing how strongly the available evidence supports a suspect’s involvement.

- Engineering: Bayes’ Theorem is used in reliability engineering to estimate the probability of failure of a system or component based on past performance data.

In summary, anyone who needs to make decisions based on uncertain information or wants to estimate the likelihood of an event occurring based on available evidence can benefit from understanding and applying Bayes’ Theorem.

When to Use Bayes’ Theorem

Bayes’ Theorem is a useful tool in probability and statistics for making decisions or predictions based on uncertain information. It is typically used in situations where:

- There is a prior belief or probability about an event or hypothesis, but new evidence or data is available that may change that belief.

- The new evidence is uncertain or noisy, and there is a need to quantify the degree of uncertainty or confidence in the updated belief.

- There is a need to update a model or estimate the probability of an event occurring based on observed data.

Some specific examples of situations where Bayes’ Theorem may be used include:

- Medical diagnosis: Bayes’ Theorem can be used to estimate the probability of a disease given the presence of certain symptoms or test results.

- Spam filtering: Bayes’ Theorem can be used to classify emails as spam or non-spam based on the content of the message.

- Quality control: Bayes’ Theorem can be used to estimate the probability of a defective product based on a sample of production data.

- Financial modeling: Bayes’ Theorem can be used to estimate the probability of a movement in a stock price or other financial variable based on past performance data.

Overall, Bayes’ Theorem is a powerful and flexible tool that can be applied in a wide range of situations where there is uncertainty and a need to make decisions based on available evidence.
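To make the spam filtering example concrete, here is a toy sketch of a naive Bayes classifier. It assumes that words occur independently given the class (the "naive" assumption), it scores only the words that are present, and it ignores words it has never seen. All probabilities are hypothetical, as if they had been estimated from a labelled set of emails.

```python
import math

# Hypothetical values, not taken from any real corpus.
P_SPAM = 0.4
WORD_GIVEN_SPAM = {"free": 0.30, "winner": 0.20, "meeting": 0.02}
WORD_GIVEN_HAM = {"free": 0.03, "winner": 0.01, "meeting": 0.25}


def spam_probability(words):
    """Return P(spam | words) using Bayes' Theorem in log space."""
    log_spam = math.log(P_SPAM)
    log_ham = math.log(1.0 - P_SPAM)
    for word in words:
        if word in WORD_GIVEN_SPAM:  # unknown words are skipped
            log_spam += math.log(WORD_GIVEN_SPAM[word])
            log_ham += math.log(WORD_GIVEN_HAM[word])
    # Normalise: dividing by the sum plays the role of P(words).
    shift = max(log_spam, log_ham)
    spam = math.exp(log_spam - shift)
    ham = math.exp(log_ham - shift)
    return spam / (spam + ham)


print(spam_probability(["free", "winner"]))
print(spam_probability(["meeting"]))
```

Working with logarithms avoids numerical underflow when many small probabilities are multiplied together.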

Where Bayes’ Theorem Is Used

Bayes’ Theorem has many applications in various fields, and it can be used wherever there is a need to make decisions or predictions based on uncertain information. Some examples of where Bayes’ Theorem is used include:

- Science and research: Bayes’ Theorem is used in scientific research to estimate the probability of a hypothesis being true based on observed data. It is also used in Bayesian statistics to update probability distributions as new data becomes available.

- Engineering: Bayes’ Theorem is used in engineering to estimate the probability of failure of a system or component based on past performance data. It is also used in reliability engineering to optimize maintenance schedules.

- Finance: Bayes’ Theorem is used in finance to estimate the probability of different investment outcomes and to manage risks in investment portfolios.

- Medical diagnosis and treatment: Bayes’ Theorem is used in medicine to estimate the probability of a disease given certain symptoms or test results. It is also used in personalized medicine to estimate the probability of a patient responding to a particular treatment.

- Law and forensics: Bayes’ Theorem is used to weigh forensic evidence, such as DNA matches and fingerprint analysis, when assessing how strongly the available evidence supports a suspect’s involvement.

Overall, Bayes’ Theorem is a widely applicable tool that can be used in many fields where there is a need to make decisions or predictions based on uncertain information.
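As one illustration of updating a probability distribution as data arrives, the sketch below estimates a component's failure probability. It assumes a Beta prior with binomial data, a common conjugate pairing that is used here only as an example. The trial counts are hypothetical.

```python
def update_beta(alpha: float, beta: float, failures: int, successes: int):
    """Conjugate update: Beta(alpha, beta) prior plus binomial data."""
    return alpha + failures, beta + successes


def beta_mean(alpha: float, beta: float) -> float:
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Start from a uniform prior Beta(1, 1) on the failure probability.
a, b = 1.0, 1.0

# First batch of hypothetical test data: 2 failures in 50 trials.
a, b = update_beta(a, b, failures=2, successes=48)
mean_after_first = beta_mean(a, b)

# Second batch: 1 failure in 100 trials. The previous posterior is the new prior.
a, b = update_beta(a, b, failures=1, successes=99)
mean_after_second = beta_mean(a, b)

print(mean_after_first, mean_after_second)
```

Each posterior serves as the prior for the next batch, which is how an estimate can be refined as more performance data is collected.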

How Bayes’ Theorem Works

Bayes’ Theorem is a mathematical formula that allows us to update our beliefs or probability estimates about an event or hypothesis based on new evidence or data. The formula is:

P(A|B) = P(B|A) * P(A) / P(B)

where:

- P(A|B) is the posterior probability, which is the updated probability of A given B

- P(B|A) is the likelihood, which is the probability of observing B given that A is true

- P(A) is the prior probability, which is the probability of A before observing B

- P(B) is the marginal probability, which is the probability of observing B regardless of whether A is true or not.

To apply Bayes’ Theorem, we first estimate the prior probability of the event or hypothesis based on historical data or prior knowledge. Then, we calculate the likelihood of observing the new evidence given that the event or hypothesis is true. We also calculate the marginal probability of observing the evidence regardless of whether the event or hypothesis is true. Finally, we use these values to update the prior probability to obtain the posterior probability.

Bayes’ Theorem is a powerful tool that allows us to incorporate new evidence into our probability estimates and update our beliefs based on the available information. It is widely used in probability and statistics, as well as in many other fields such as machine learning, finance, and medicine.
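The procedure described above can be sketched as a single update step that is applied repeatedly. The example assumes a yes/no hypothesis and two independent pieces of positive evidence. The 1% prior, 90% detection rate, and 5% false positive rate are hypothetical.

```python
def update(prior: float, p_evidence_if_true: float, p_evidence_if_false: float) -> float:
    """One Bayesian update for a binary hypothesis."""
    numerator = p_evidence_if_true * prior
    # Marginal probability of the evidence (law of total probability).
    marginal = numerator + p_evidence_if_false * (1.0 - prior)
    if marginal == 0.0:
        raise ValueError("evidence has zero probability under both hypotheses")
    return numerator / marginal


belief = 0.01          # prior probability of the hypothesis
history = [belief]
for _ in range(2):     # two independent positive observations
    belief = update(belief, p_evidence_if_true=0.90, p_evidence_if_false=0.05)
    history.append(belief)

print([round(p, 3) for p in history])
```

A single positive result leaves the hypothesis fairly unlikely because the prior is low, while a second independent positive result raises the posterior substantially.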

Case Study on Bayes’ Theorem

Case Study: Medical Diagnosis Using Bayes’ Theorem

Bayes’ Theorem is widely used in medical diagnosis, particularly in situations where there is uncertainty in the diagnosis or when the diagnostic tests are not perfectly accurate. In this case study, we will consider an example of how Bayes’ Theorem can be used in medical diagnosis.

Suppose a patient comes to a clinic with a fever, cough, and fatigue. The doctor suspects that the patient may have either the flu or a bacterial infection, such as pneumonia. The doctor decides to perform a test for the flu and a chest X-ray to check for pneumonia.

The flu test has a sensitivity of 90% and a specificity of 95%. This means that if a patient has the flu, the test will correctly identify it 90% of the time, and if a patient does not have the flu, the test will correctly identify it 95% of the time. The chest X-ray has a sensitivity of 80% and a specificity of 90%.

Before performing any tests, the doctor estimates that the prior probability of the patient having the flu is 20% and the prior probability of the patient having pneumonia is 15%. The prior probability of the patient having neither condition is 65%.

Using Bayes’ Theorem, we can calculate the posterior probability of the patient having the flu or pneumonia based on the results of the tests.

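First, let’s state the likelihoods, which follow directly from the sensitivity and specificity of each test (the false positive rate is 1 minus the specificity):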

- The likelihood of a positive flu test given that the patient has the flu is 0.9.

- The likelihood of a positive flu test given that the patient does not have the flu is 0.05.

- The likelihood of a positive chest X-ray given that the patient has pneumonia is 0.8.

- The likelihood of a positive chest X-ray given that the patient does not have pneumonia is 0.1.

Next, we can calculate the marginal probabilities:

- The probability of a positive flu test is the probability that the patient has the flu multiplied by the likelihood of a positive test given the patient has the flu, plus the probability that the patient does not have the flu multiplied by the likelihood of a positive test given the patient does not have the flu: P(positive flu test) = (0.2 * 0.9) + (0.8 * 0.05) = 0.18 + 0.04 = 0.22

- The probability of a positive chest X-ray is the probability that the patient has pneumonia multiplied by the likelihood of a positive X-ray given the patient has pneumonia, plus the probability that the patient does not have pneumonia multiplied by the likelihood of a positive X-ray given the patient does not have pneumonia: P(positive chest X-ray) = (0.15 * 0.8) + (0.85 * 0.1) = 0.12 + 0.085 = 0.205

Now we can use Bayes’ Theorem to calculate the posterior probabilities:

- The posterior probability of the patient having the flu given a positive flu test is: P(flu|positive flu test) = P(positive flu test|flu) * P(flu) / P(positive flu test) = (0.9 * 0.2) / 0.22 ≈ 0.82

- The posterior probability of the patient having pneumonia given a positive chest X-ray is: P(pneumonia|positive chest X-ray) = P(positive chest X-ray|pneumonia) * P(pneumonia) / P(positive chest X-ray) = (0.8 * 0.15) / 0.205 ≈ 0.59
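The arithmetic in this case study can be recomputed with a short script. It is a sketch that takes the priors, sensitivities, and specificities stated above as inputs and treats each test separately. The function name is hypothetical.

```python
def posterior_given_positive(prior: float, sensitivity: float, specificity: float):
    """Return (P(positive), P(condition | positive)) for one test."""
    false_positive_rate = 1.0 - specificity
    p_positive = sensitivity * prior + false_positive_rate * (1.0 - prior)
    return p_positive, sensitivity * prior / p_positive


# Flu test: prior 20%, sensitivity 90%, specificity 95%.
p_pos_flu, p_flu = posterior_given_positive(0.20, 0.90, 0.95)

# Chest X-ray for pneumonia: prior 15%, sensitivity 80%, specificity 90%.
p_pos_xray, p_pneumonia = posterior_given_positive(0.15, 0.80, 0.90)

print(f"P(positive flu test) = {p_pos_flu:.3f}, P(flu | positive) = {p_flu:.3f}")
print(f"P(positive X-ray) = {p_pos_xray:.3f}, P(pneumonia | positive) = {p_pneumonia:.3f}")
```

Recomputing the values in code is a useful check, because a posterior probability can never exceed 1 and the marginal must always be at least as large as the numerator.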

Based on these calculations, the doctor can now update their diagnosis and determine the most appropriate course of treatment. In this case, if both tests come back positive, the patient is more likely to have the flu than pneumonia.

White Paper on Bayes’ Theorem

Bayes’ Theorem: A Comprehensive Introduction

Introduction

Bayes’ Theorem is a mathematical formula used to update the probability of an event or hypothesis based on new evidence or data. It is a powerful tool that has applications in a wide range of fields, including probability and statistics, machine learning, finance, and medicine. In this white paper, we provide a comprehensive introduction to Bayes’ Theorem, including its history, basic concepts, and applications.

History

Bayes’ Theorem is named after Reverend Thomas Bayes, an English statistician and Presbyterian minister who first formulated the theorem in the mid-18th century. However, Bayes’ original work was not widely known or appreciated during his lifetime, and it was only after his death that his friend Richard Price discovered Bayes’ unpublished manuscript and published it in the Philosophical Transactions of the Royal Society of London in 1763.

The theorem was subsequently developed and refined by other mathematicians and statisticians, including Pierre-Simon Laplace, who independently formulated the theorem in its general form and applied it to scientific problems, and Harold Jeffreys, who developed a Bayesian approach to statistical inference.

Basic Concepts

Bayes’ Theorem is based on the idea of conditional probability, which is the probability of an event occurring given that another event has occurred. The formula for conditional probability is:

P(A|B) = P(A and B) / P(B)

where P(A and B) is the probability of both A and B occurring, and P(B) is the probability of B occurring.

Bayes’ Theorem is a way to update our beliefs or probability estimates about an event or hypothesis based on new evidence or data. The formula is:

P(A|B) = P(B|A) * P(A) / P(B)

where P(A|B) is the posterior probability, which is the updated probability of A given B, P(B|A) is the likelihood, which is the probability of observing B given that A is true, P(A) is the prior probability, which is the probability of A before observing B, and P(B) is the marginal probability, which is the probability of observing B regardless of whether A is true or not.
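The link between the two formulas can be made explicit. Applying the definition of conditional probability in both directions and eliminating the joint probability gives Bayes’ Theorem, assuming P(A) and P(B) are both greater than zero:

```latex
\begin{align}
P(A \mid B) &= \frac{P(A \cap B)}{P(B)}, &
P(B \mid A) &= \frac{P(A \cap B)}{P(A)} \\
P(A \cap B) &= P(B \mid A)\, P(A) \\
P(A \mid B) &= \frac{P(B \mid A)\, P(A)}{P(B)}
\end{align}
```

Here P(A ∩ B) is the same quantity written as P(A and B) in the conditional probability formula.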

Applications

Bayes’ Theorem has many applications in probability and statistics, as well as in other fields. Some common applications include:

- Medical Diagnosis: Bayes’ Theorem can be used to update the probability of a disease based on the results of diagnostic tests.

- Spam Filtering: Bayes’ Theorem can be used to classify emails as either spam or not spam based on the content of the email.

- Stock Market Analysis: Bayes’ Theorem can be used to update the probability of a stock price change based on new information about the company or the market.

- Machine Learning: Bayes’ Theorem is the basis for Bayesian inference, a statistical technique used in many machine learning algorithms.

Conclusion

Bayes’ Theorem is a powerful and versatile tool that allows us to update our beliefs or probability estimates based on new evidence or data. It has many applications in probability and statistics, as well as in other fields such as machine learning, finance, and medicine. By understanding the basic concepts of Bayes’ Theorem and its applications, we can make more informed decisions and predictions in a wide range of situations.