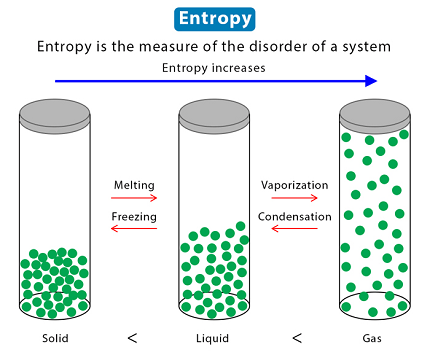

Entropy is a concept from thermodynamics that describes the degree of disorder or randomness in a system. In statistical mechanics, it is defined in terms of the number of microstates (arrangements of particles or energy levels) that correspond to a given macrostate (observable properties like temperature, pressure, or volume): by Boltzmann's formula, entropy is proportional to the logarithm of that number. The more microstates are compatible with a macrostate, the higher the entropy and the less ordered the system.
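
For reference, the statistical-mechanics definition is usually written as Boltzmann's formula. The symbols are introduced here for illustration and are not used elsewhere in this article: $S$ is the entropy, $k_B$ is the Boltzmann constant, and $\Omega$ is the number of microstates compatible with the macrostate.

$$
S = k_B \ln \Omega
$$

Because of the logarithm, doubling the number of microstates adds a fixed amount, $k_B \ln 2$, to the entropy instead of doubling it.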

Entropy is a fundamental property of any physical system and plays a crucial role in various areas of science and engineering, including thermodynamics, information theory, chemistry, and biology. In thermodynamics, the second law states that the entropy of an isolated system never decreases over time, which accounts for the irreversibility of processes such as heat flow from a hot object to a colder one.

In information theory, entropy measures the uncertainty or randomness of a message or data source. The higher the entropy, the more unpredictable or complex the source is, and the more bits of information are needed to represent it accurately.
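
As a rough illustration of the information-theoretic definition, the sketch below estimates Shannon entropy from the symbol frequencies of a short string. The function name `shannon_entropy` and the sample strings are assumptions made for this example. The estimate treats each character as an independent draw, which is a simplification for real messages.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Return the empirical Shannon entropy of `message` in bits per symbol."""
    if not message:
        return 0.0
    counts = Counter(message)
    total = len(message)
    # H = sum over symbols of p * log2(1 / p), with p estimated as count / total
    return sum((n / total) * math.log2(total / n) for n in counts.values())


print(shannon_entropy("aaaaaaaa"))  # one repeated symbol: fully predictable
print(shannon_entropy("abababab"))  # two equally frequent symbols
print(shannon_entropy("abcdefgh"))  # eight equally frequent symbols
```

The three calls print 0.0, 1.0, and 3.0 bits per symbol: the more evenly the symbols are spread, the more bits are needed on average to encode each one.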

Overall, entropy is a concept that helps us understand the behavior and properties of diverse systems in the natural world and is an important tool for modeling and analyzing complex phenomena.

What is Required Entropy

Required entropy refers to the minimum amount of randomness or unpredictability needed to achieve a specific level of security in a cryptographic system. It is typically measured in bits, and the higher the required entropy, the harder it is to crack the encryption.

In cryptography, the goal is to ensure that the information being transmitted or stored remains confidential and secure. This is done by using mathematical algorithms to scramble the information in such a way that it becomes unreadable without the correct key. The strength of the encryption depends on several factors, including the algorithm used, the length of the key, and the amount of entropy used to generate the key.

For example, if a cryptographic system requires a key with 128 bits of entropy, the key must be generated by a process that produces at least 128 bits of randomness. If the key has fewer than 128 bits of entropy, the effective search space shrinks and the key becomes more vulnerable to brute-force attacks, in which an attacker tries every possible key until the correct one is found.
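
A minimal sketch of the 128-bit example, using Python's standard `secrets` module, which draws from the operating system's cryptographically secure random source. The helper `expected_bruteforce_years` and the guess rate of one trillion keys per second are hypothetical values chosen only to show the scale of the search space.

```python
import secrets

KEY_BITS = 128  # required entropy assumed for this illustration

# 16 random bytes = 128 bits taken from the OS random source
key = secrets.token_bytes(KEY_BITS // 8)


def expected_bruteforce_years(bits: int, guesses_per_second: float) -> float:
    """Expected time to find a uniformly random key by trying keys one by one.

    On average an attacker has to search half of the 2**bits keyspace.
    """
    seconds_per_year = 365 * 24 * 3600
    return (2 ** (bits - 1)) / guesses_per_second / seconds_per_year


print(len(key) * 8)  # key length in bits
# Hypothetical attacker speed: 1e12 guesses per second
print(f"{expected_bruteforce_years(KEY_BITS, 1e12):.2e} years")
print(f"{expected_bruteforce_years(40, 1e12):.2e} years")  # a 40-bit key, for contrast
```

The contrast between the last two lines is the point: each bit of entropy removed from the key halves the expected work for the attacker.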

In summary, required entropy is an important concept in cryptography that helps determine the level of security needed for a given system and ensures that the encryption key is strong enough to resist attacks.

When is Required Entropy Needed

“Required Entropy” is a concept that is used in the field of cryptography when generating encryption keys to ensure the security of sensitive information. The amount of required entropy depends on the specific security needs of the cryptographic system and is typically determined by the length of the encryption key and the desired level of security.

In general, a higher level of required entropy is needed for cryptographic systems that require stronger security, such as those used for online banking, military communications, or other sensitive applications. The required entropy can be generated through various means, such as random number generators or hardware devices that produce true randomness, to ensure that the encryption keys are truly unpredictable and resistant to attacks.

Overall, required entropy is an important consideration in cryptography and is used to ensure that cryptographic systems are designed with appropriate levels of security to protect against potential threats.

Where is Required Entropy Used

“Required Entropy” is a concept used in the field of cryptography, and it doesn’t refer to a physical location. It is a property of a cryptographic system, and it is determined by the length of the encryption key and the desired level of security.

In cryptography, entropy is often generated through various means, such as software-based or hardware-based random number generators, to ensure that the encryption keys are truly unpredictable and resistant to attacks. The entropy can be generated and used by the cryptographic system on any computer or device that is capable of running the necessary software.

Overall, required entropy is a mathematical concept used to ensure the security of cryptographic systems and is not limited to any specific physical location.

Production of Entropy

Entropy can be produced in various ways, depending on the context and the system being considered. Here are a few examples of how entropy can be produced:

- Thermal energy transfer: When heat is transferred from a hotter object to a cooler object, entropy is produced. The hotter object loses entropy, but the cooler object gains more than the hotter one loses, because the same amount of heat corresponds to a larger entropy change at a lower temperature. The overall entropy of the system therefore increases.

- Mixing of substances: When two substances are mixed together, entropy is produced. This is because the mixing causes the particles to become more randomly distributed, increasing the overall entropy of the system.

- Expansion of gases: When a gas expands into a larger volume, entropy is produced. This is because the particles in the gas become more spread out and disordered, increasing the overall entropy of the system.

- Randomness in data: In information theory, entropy is not produced by a physical process; it quantifies the randomness or unpredictability of a message or data source. The higher the entropy, the harder it is to predict or guess the message or data.

- Chaotic systems: Chaotic systems are highly sensitive to initial conditions, so small changes can lead to widely diverging and effectively unpredictable behavior. As such a system evolves, uncertainty about its exact state grows, which can be described as an increase in entropy.

These are just a few examples of how entropy can be produced in different contexts. Overall, entropy is a fundamental property of systems and is produced whenever there is a tendency towards greater randomness or disorder.
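
The three thermodynamic examples in the list can be put into numbers with standard textbook formulas. The sketch below assumes idealized conditions that the text does not spell out: both objects stay at constant temperature during the heat transfer, and the gases are ideal. The function names and the sample values are chosen for illustration only.

```python
import math

R = 8.314462618  # molar gas constant in J/(mol*K)


def heat_transfer_entropy(heat_j: float, t_hot_k: float, t_cold_k: float) -> float:
    """Entropy produced when `heat_j` flows from a body at t_hot_k to one at t_cold_k."""
    # The cold body gains Q / T_cold, the hot body loses Q / T_hot.
    return heat_j / t_cold_k - heat_j / t_hot_k


def expansion_entropy(moles: float, v_initial: float, v_final: float) -> float:
    """Entropy change of an ideal gas expanding at constant temperature."""
    return moles * R * math.log(v_final / v_initial)


def mixing_entropy(moles_per_gas: list) -> float:
    """Entropy of mixing ideal gases at equal temperature and pressure."""
    total = sum(moles_per_gas)
    # -n_total * R * sum(x_i * ln x_i), written with n_i = x_i * n_total
    return -R * sum(n * math.log(n / total) for n in moles_per_gas if n > 0)


print(heat_transfer_entropy(1000.0, 400.0, 300.0))  # J/K, positive
print(expansion_entropy(1.0, 1.0, 2.0))             # J/K, volume doubles
print(mixing_entropy([1.0, 1.0]))                   # J/K, two gases, 1 mol each
```

All three results are positive, matching the statement that each of these processes increases the overall entropy.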

How is Required Entropy Determined

“Required Entropy” is a mathematical concept used in cryptography to measure the minimum amount of randomness or unpredictability needed to generate a strong encryption key. The amount of required entropy depends on the desired level of security and is typically expressed in terms of the number of bits needed for the encryption key.

The process of generating entropy for cryptographic purposes typically involves using random number generators or hardware devices that produce true randomness. These sources of randomness are used to create a random bit string, which is then used as the key for the cryptographic algorithm.

The strength of the encryption key depends on the amount of entropy used to generate it, not only on its length. The more entropy that is used, the harder it is for an attacker to guess or brute-force the key. For example, a 128-bit key must be generated from at least 128 bits of entropy to provide its full 128-bit strength; a long key derived from a low-entropy source is only as strong as that source.
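
To make the difference between key length and key entropy concrete, the sketch below derives a 256-bit key from a 4-digit PIN and then recovers the PIN by exhaustive search. The derivation scheme (`derive_key`, a single SHA-256 hash) is a deliberately weak, hypothetical example and not a recommended construction.

```python
import hashlib
import math


def derive_key(pin: str) -> bytes:
    """Hypothetical weak scheme: hash a 4-digit PIN into a 32-byte key."""
    return hashlib.sha256(pin.encode("utf-8")).digest()


def brute_force_pin(target_key: bytes):
    """Try all 10,000 possible PINs and return the one that yields target_key."""
    for candidate in range(10_000):
        pin = f"{candidate:04d}"
        if derive_key(pin) == target_key:
            return pin
    return None


key = derive_key("4821")
print(len(key) * 8)          # the key is 256 bits long
print(brute_force_pin(key))  # yet the PIN is found within 10,000 guesses
print(math.log2(10_000))     # entropy of a uniformly random 4-digit PIN, in bits
```

The key looks like a 256-bit value, but it carries only about 13.3 bits of entropy, so the search finishes almost instantly.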

In summary, required entropy is determined mathematically based on the desired level of security and is generated using sources of randomness to create strong encryption keys for cryptographic systems.

Case Study on Entropy

One interesting case study on entropy is its application in password security. Passwords are commonly used as a form of authentication and access control for various systems and applications, but they can also be a weak point in the security chain if they are easily guessable or crackable.

To create strong passwords, it is recommended to use a combination of uppercase and lowercase letters, numbers, and special characters, and to avoid using common words or patterns. However, even with these precautions, it can be difficult to ensure that a password is truly unpredictable and secure.

This is where entropy comes in. Password entropy refers to the amount of randomness or unpredictability in a password, and it can be measured in bits. The higher the entropy, the stronger and more secure the password is.

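To calculate password entropy, one can use the formula: (password length) x log2(number of possible characters). The formula assumes that every character is chosen independently and uniformly at random, so it overestimates the strength of passwords that people pick themselves. For example, a random 10-character password drawn from uppercase and lowercase letters and digits (62 possible characters) has an entropy of approximately 59.5 bits. Adding the 32 printable ASCII special characters (94 possible characters) raises this to approximately 65.5 bits, which is generally considered a strong password.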
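
The formula is easy to check in a few lines. In the sketch below, the function name `password_entropy_bits` is an assumption for this example, and the character sets are taken from Python's `string` module: 62 letters and digits, plus 32 ASCII punctuation characters. As with the formula itself, the result only applies to passwords whose characters are chosen uniformly at random.

```python
import math
import string


def password_entropy_bits(alphabet_size: int, length: int) -> float:
    """Entropy in bits of a password of `length` characters drawn uniformly
    at random from an alphabet of `alphabet_size` characters."""
    return length * math.log2(alphabet_size)


alphanumeric = len(string.ascii_letters + string.digits)  # 62 characters
printable = alphanumeric + len(string.punctuation)        # 94 characters

print(round(password_entropy_bits(alphanumeric, 10), 1))  # letters and digits
print(round(password_entropy_bits(printable, 10), 1))     # plus special characters
print(round(password_entropy_bits(printable, 16), 1))     # a longer password
```

Length has a larger effect than alphabet size: going from 10 to 16 characters adds far more bits than adding the special characters does.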

However, even with strong password entropy, it is still important to use other security measures, such as two-factor authentication or password managers, to protect against potential attacks.

In summary, entropy is a crucial concept in password security and can help ensure that passwords are strong and secure. By using high entropy passwords, users can significantly reduce the risk of unauthorized access and protect their sensitive information.

White paper on Entropy

Introduction:

Entropy is a concept used in various fields, including physics, information theory, and cryptography. In physics, entropy refers to the degree of disorder or randomness in a system, while in information theory, it refers to the amount of uncertainty or unpredictability in a message or data. In cryptography, entropy is used to generate strong encryption keys that are resistant to attacks. This white paper will provide an overview of entropy, its applications, and its importance in various fields.

What is Entropy?

Entropy is a measure of the degree of disorder or randomness in a system. It was first introduced in the field of thermodynamics as a way to quantify the amount of energy that is unavailable for work in a closed system. In this context, entropy is related to the concept of heat and the tendency of energy to flow from hotter to cooler objects.

In information theory, entropy is used to measure the amount of uncertainty or unpredictability in a message or data. For example, a message consisting of random letters and numbers has a higher entropy than a message consisting of words or phrases. The higher the entropy, the harder it is to predict or guess the message or data.

Applications of Entropy:

Entropy has applications in various fields, including physics, information theory, and cryptography. In physics, entropy is used to describe the thermodynamic properties of systems and the behavior of energy. It is also used in the study of statistical mechanics and the behavior of large-scale systems.

In information theory, entropy is used to measure the amount of uncertainty or unpredictability in a message or data. This concept is important in lossless data compression, where the goal is to reduce the size of data while still allowing the original to be reconstructed exactly. The entropy of the source sets a lower bound on the average number of bits per symbol, and entropy coding techniques such as Huffman coding or arithmetic coding can get close to that bound.
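
As a small sketch of entropy coding, the code below builds Huffman code lengths for a short string and compares the average code length with the empirical entropy of the same string. The function names and the sample message are assumptions for this example. Only code lengths are computed, not the actual bit patterns, to keep the sketch short.

```python
import heapq
import math
from collections import Counter


def huffman_code_lengths(message: str) -> dict:
    """Return a dict mapping each symbol to the length of its Huffman code."""
    counts = Counter(message)
    if len(counts) == 1:
        # A single distinct symbol still needs one bit per occurrence.
        return {symbol: 1 for symbol in counts}
    # Heap entries: (weight, unique tie-breaker, {symbol: depth in subtree})
    heap = [(n, i, {s: 0}) for i, (s, n) in enumerate(counts.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        merged = {s: depth + 1 for s, depth in {**left, **right}.items()}
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


def average_code_length(message: str) -> float:
    """Average number of bits per symbol when `message` is Huffman-coded."""
    lengths = huffman_code_lengths(message)
    return sum(lengths[s] for s in message) / len(message)


def empirical_entropy(message: str) -> float:
    """Shannon entropy in bits per symbol, from symbol frequencies."""
    total = len(message)
    return sum((n / total) * math.log2(total / n) for n in Counter(message).values())


sample = "aaaabbcd"
print(huffman_code_lengths(sample))
print(average_code_length(sample))
print(empirical_entropy(sample))
```

For this sample the symbol frequencies are exact powers of one half, so the average code length equals the entropy at 1.75 bits per symbol, compared with 2 bits for a fixed-length code over four symbols. For other frequency distributions the Huffman average lies slightly above the entropy.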

In cryptography, entropy is used to generate strong encryption keys that are resistant to attacks. The strength of an encryption key depends on the amount of entropy used to generate it. A key with high entropy is more secure than a key with low entropy because it is harder to guess or crack.

Importance of Entropy:

Entropy is an important concept in various fields because it helps us understand the behavior of complex systems and the properties of data. In physics, entropy is a fundamental property of thermodynamic systems and provides insight into the behavior of energy. In information theory, entropy is used to measure the amount of uncertainty in data and is an essential tool in data compression and transmission.

In cryptography, entropy is critical for generating strong encryption keys that are resistant to attacks. A key with low entropy can be easily guessed or cracked, compromising the security of the encrypted data. Therefore, it is important to use high entropy sources, such as random number generators or hardware devices that produce true randomness, to generate strong encryption keys.

Conclusion:

Entropy is a fundamental concept used in various fields, including physics, information theory, and cryptography. It measures the degree of disorder or randomness in a system and is important for understanding the behavior of complex systems and the properties of data. In cryptography, entropy is used to generate strong encryption keys that are resistant to attacks, and its importance cannot be overstated. By using high entropy sources to generate encryption keys, we can ensure the security of sensitive information and protect against potential threats.